AI Is Not a Panacea: Why Most Projects Fail and How to Use It Wisely

Alina Ramanenkava, Growth Marketing Manager

Last updated: September 18, 2025

Introduction

Artificial intelligence has been hailed as the new industrial revolution, a force expected to redefine industries, accelerate innovation, and unlock efficiency. In boardrooms and press headlines alike, it is framed as a technology that can solve almost any problem if deployed quickly enough.

However, the reality is less optimistic. In 2024, a Canadian tribunal held Air Canada liable after its chatbot gave a customer misleading information on bereavement fares. This is not an isolated incident, but rather part of a broader pattern: projects stall in the proof-of-concept phase, costs spiral, or outputs prove unreliable when scaled. Reports confirm the scale of the problem: MIT estimates a 95% failure rate for generative AI pilots, RAND puts the figure at up to 80% across AI projects, and S&P Global shows nearly half of initiatives are scrapped before production.

This article takes a clear-eyed look at why so many projects break down, the limits of current systems, and what strategies help AI deliver real value. The focus is on balance: AI is not a panacea, but a tool that works best when paired with human expertise and small, disciplined experiments.

1. From Hype to Reality: Why Most AI Projects Fail

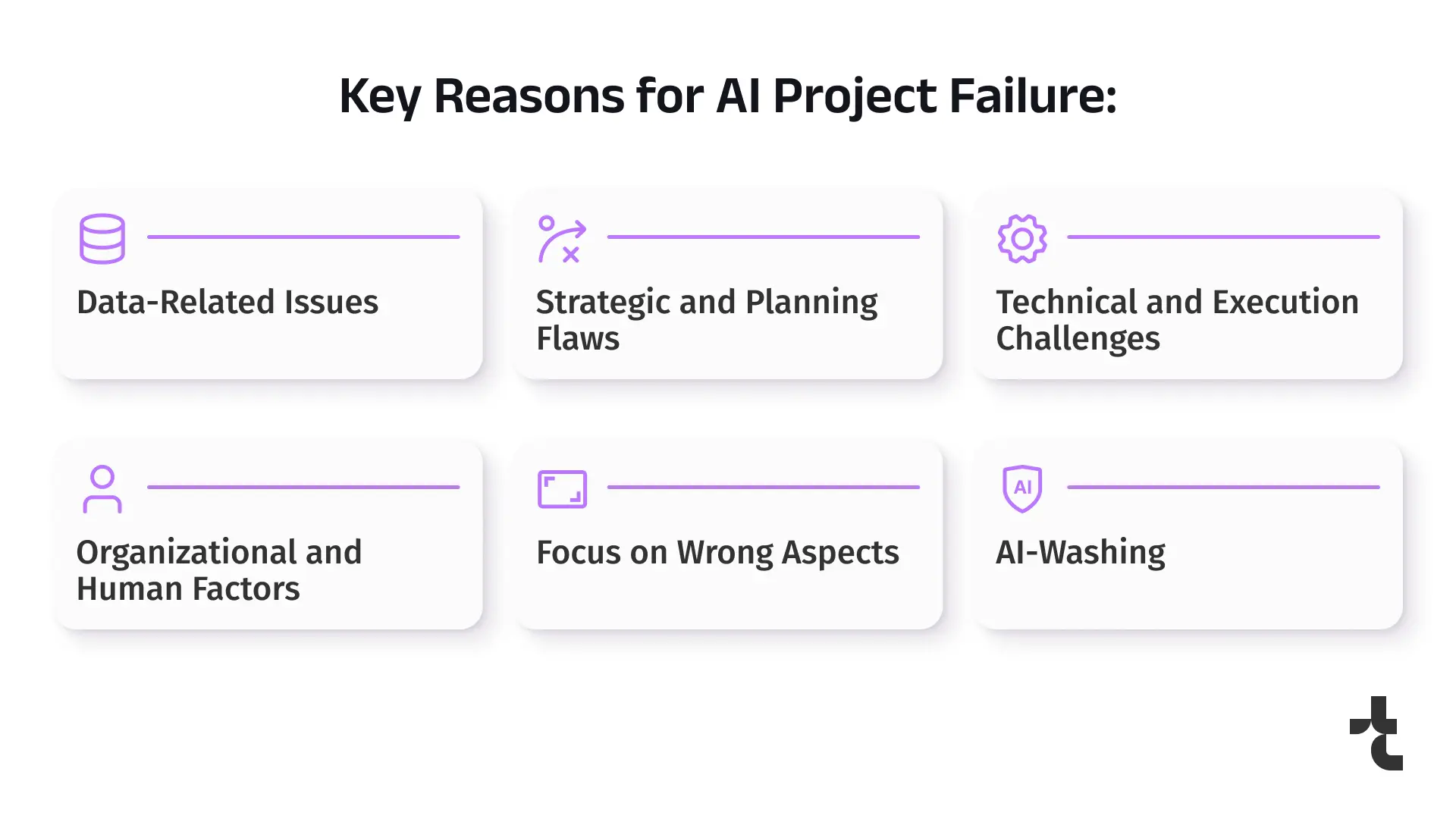

Why do ambitious AI ventures so often crumble before launch? Despite AI’s transformative promise, the reality is that most projects never deliver measurable value. Depending on the study and on how failure is defined, reported failure rates range from roughly 40% to 95%, underscoring systemic challenges that organizations continue to overlook.

Recent reports underline the scale of the problem. MIT (2025) estimates that 95% of generative AI pilots fail, often due to brittle workflows and misaligned expectations. RAND notes failure rates of up to 80%, nearly double that of non-AI IT projects. S&P Global shows 42% of initiatives scrapped in 2025, sharply up from 17% a year earlier.

Leadership and Strategic Errors

One of the most common reasons AI projects collapse is a lack of alignment between business objectives and AI’s actual capabilities. Leaders, swayed by hype, often deploy AI for problems better suited to traditional methods or overestimate its readiness for complex tasks. This misalignment is compounded by unrealistic expectations of rapid returns, ignoring the significant time and resources required for successful implementation.

This echoes a common trap: chasing “shiny” front-end use cases without addressing core business needs.

Data and Infrastructure Challenges

AI’s effectiveness hinges on data, yet many organizations grapple with poor data quality, insufficient data volumes, or fragmented systems that impede integration. Building robust data pipelines and integrations, ensuring governance, and leveraging cloud infrastructure all demand substantial investment, and these costs are often underestimated during project planning.

The AI-Washing Phenomenon

Another driver of failure is the growing trend of AI-washing – products branded as “AI-powered” that are little more than conventional software, or worse, smoke and mirrors.

One notorious case from 2025 involved a startup that marketed its chatbot as AI-driven, only for journalists to uncover that the service was actually run by human engineers in India, who processed requests manually in real time.

Stories like this erode trust and waste investment, leaving businesses wary of genuine AI solutions. For companies, the lesson is clear: demand transparency from vendors and validate claims before committing resources.

2. The Limits of Current AI Systems

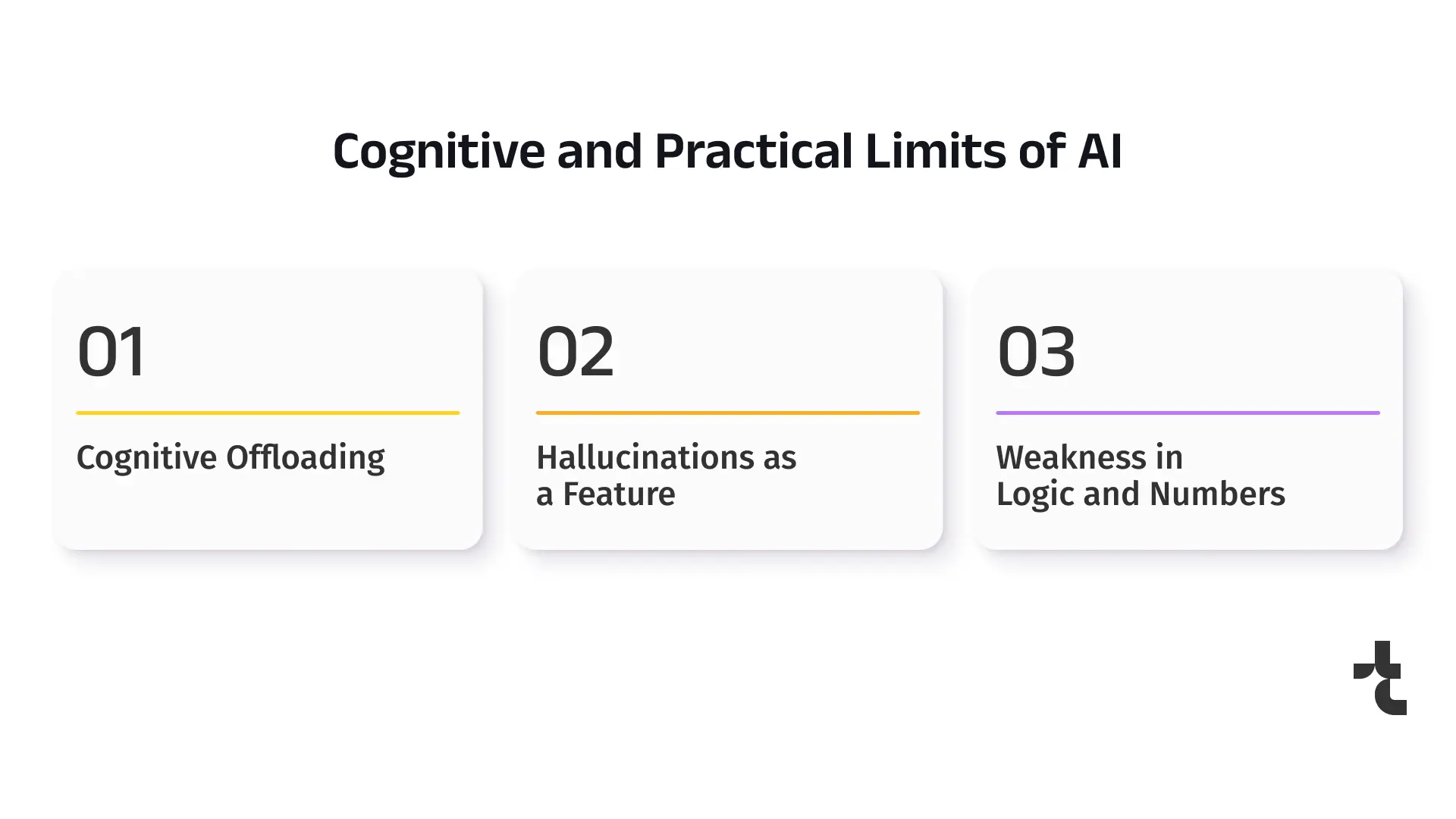

Cognitive Offloading

One subtle risk of everyday AI use is cognitive offloading: people rely so heavily on the system that they stop exercising their own judgment. A 2025 MIT study, Your Brain on ChatGPT, found that users who leaned heavily on generative models produced less original work and retained less information, even when they believed the tool was helping them. AI can accelerate drafting and ideation, but overreliance may dull critical thinking.

Hallucinations as a Feature

Generative models are designed to produce fluent language, not guaranteed facts. As a result, they often generate statements that sound plausible but are entirely false. This isn’t a rare “bug”; it’s a systemic property of how large language models work. One well-known issue is fabricated references: systems confidently cite articles, journals, or legal cases that never existed. Without robust fact-checking, these errors can erode trust and cause real-world damage.

Weakness in Logic and Numbers

Another limitation is reasoning. Because models process text as tokens rather than concepts, they struggle with tasks that require structured logic, multi-step deduction, or precise calculations. From basic arithmetic errors to failures in complex problem-solving, the gap between linguistic fluency and true reasoning remains significant. For business use, this means AI can draft convincing narratives but cannot be assumed to “think through” problems in a reliable way.

3. Working with AI Wisely

Effective use of AI starts with clarity: define the task, provide examples, and structure the request. While prompt engineering has become a common skill, its importance will likely decline as models evolve. For now, though, clear instructions remain one of the simplest ways to get reliable results. Organizations seeking to transition from experiments to production can outsource AI software development services to ensure solutions are tailored to meet business needs.

No matter how polished the prompt, errors are inevitable. Human review must remain part of the process, but not every task needs the same level of scrutiny. For critical domains such as finance or law, outputs require thorough verification.

For creative or brainstorming tasks, AI can be used more freely with expert judgment filtering results. In operational, low-stakes tasks, spot-checking a sample may be enough. This balance allows speed without sacrificing trust.
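The tiered review described above can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the `Risk` tiers, the `select_for_review` helper, and the 10% default sample rate are hypothetical names and values chosen for the example, not a prescribed process.

```python
import random
from enum import Enum


class Risk(Enum):
    CRITICAL = "critical"        # e.g. finance or law: verify every output
    CREATIVE = "creative"        # brainstorming: an expert filters the results
    OPERATIONAL = "operational"  # low-stakes routine work: spot-check a sample


def select_for_review(outputs, risk, sample_rate=0.1, seed=None):
    """Return the subset of AI outputs a human should look at.

    sample_rate is an assumed default; tune it to your own risk appetite.
    """
    outputs = list(outputs)
    if risk in (Risk.CRITICAL, Risk.CREATIVE):
        # Every output passes through a person; only the depth of review differs.
        return outputs
    if not outputs:
        return []
    # Operational tier: review a random sample, but never fewer than one item.
    k = max(1, round(len(outputs) * sample_rate))
    return random.Random(seed).sample(outputs, k)


drafts = [f"output-{i}" for i in range(50)]
print(len(select_for_review(drafts, Risk.CRITICAL)))     # all 50 go to a reviewer
print(len(select_for_review(drafts, Risk.OPERATIONAL)))  # a sample of 5
```

The point of writing the policy down, even this crudely, is that the level of scrutiny becomes an explicit decision per task type rather than something each employee improvises.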

4. How to Avoid AI Failures

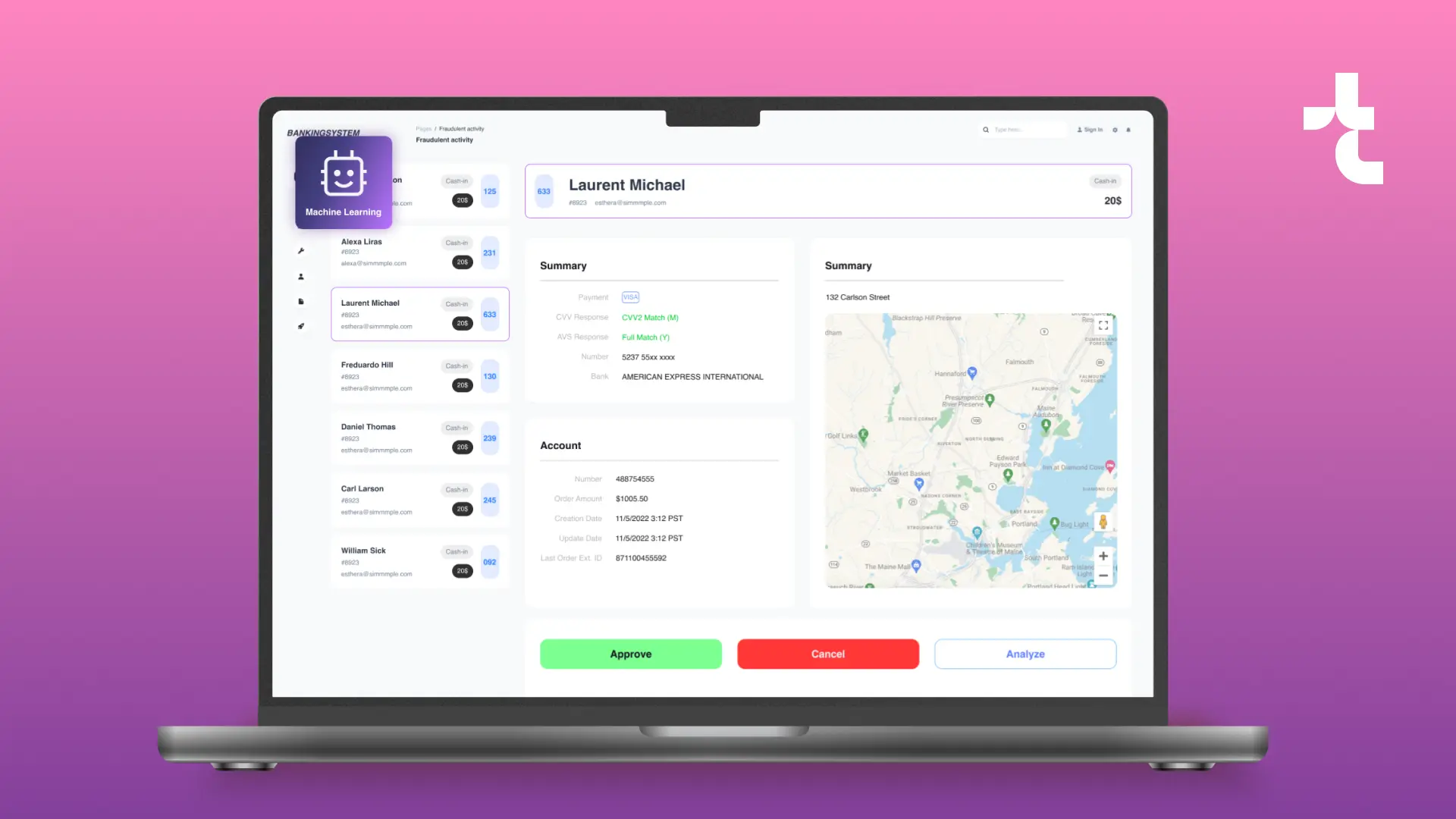

At Timspark, we believe the most effective way to adopt AI is through small, well-scoped experiments rather than risky, large-scale bets. In one such initiative, our team built a lightweight prototype in just ten hours using off-the-shelf tools.

The system automatically processed business conversations, identified customer objections, and highlighted follow-up actions. Benchmarked against human reviewers, it delivered promising results:

- Efficiency: handled a scale of information impossible to manage manually;

- Accuracy: on a benchmark of 10–20 cases, results were comparable to human review;

- Trust: kept humans in the loop, with sales reps validating ~30% of outputs.

Beyond operational efficiency, the experiment revealed blind spots in the service offering itself, insights that would have been invisible without the aid of AI. The takeaway is clear: small, disciplined experiments can surface real value and inform where larger investments make sense.
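For readers who want to run a similar benchmark, here is a minimal sketch of how AI output can be compared with human review. The function names and the labels below are made up for illustration; they are not the prototype's code or Timspark's data.

```python
def agreement(ai_labels, human_labels):
    """Share of cases where the AI label matches the human reviewer's label."""
    if len(ai_labels) != len(human_labels):
        raise ValueError("both label lists must cover the same cases")
    if not ai_labels:
        raise ValueError("benchmark is empty")
    matches = sum(a == h for a, h in zip(ai_labels, human_labels))
    return matches / len(ai_labels)


def disagreements(ai_labels, human_labels):
    """Indices of cases worth a second look because the two labels differ."""
    return [i for i, (a, h) in enumerate(zip(ai_labels, human_labels)) if a != h]


# Made-up objection labels for a 10-case benchmark (illustration only).
human = ["price", "timing", "none", "price", "trust",
         "none", "timing", "price", "none", "trust"]
ai = ["price", "timing", "none", "trust", "trust",
      "none", "timing", "price", "price", "trust"]

print(f"agreement: {agreement(ai, human):.0%}")             # agreement: 80%
print("review these cases:", disagreements(ai, human))      # [3, 8]
```

With only 10 to 20 cases, a single disagreement moves the score by several percentage points, so a result like this is best read as a signal that a larger test is worthwhile, not as a final accuracy figure.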

5. AI as a Co-Pilot, Not an Autopilot

The most effective way to deploy AI is not as a replacement for human intelligence but as a complement to it. When organizations frame AI as a collaborator, a co-pilot rather than an autopilot, they position themselves to capture benefits while minimizing risks.

Co-Pilot, Not Autopilot

AI is best used as an assistant rather than a replacement. It can draft, summarize, and highlight patterns, but final accountability remains with people. The principle is simple: AI extends capacity, while humans ensure judgment and responsibility.

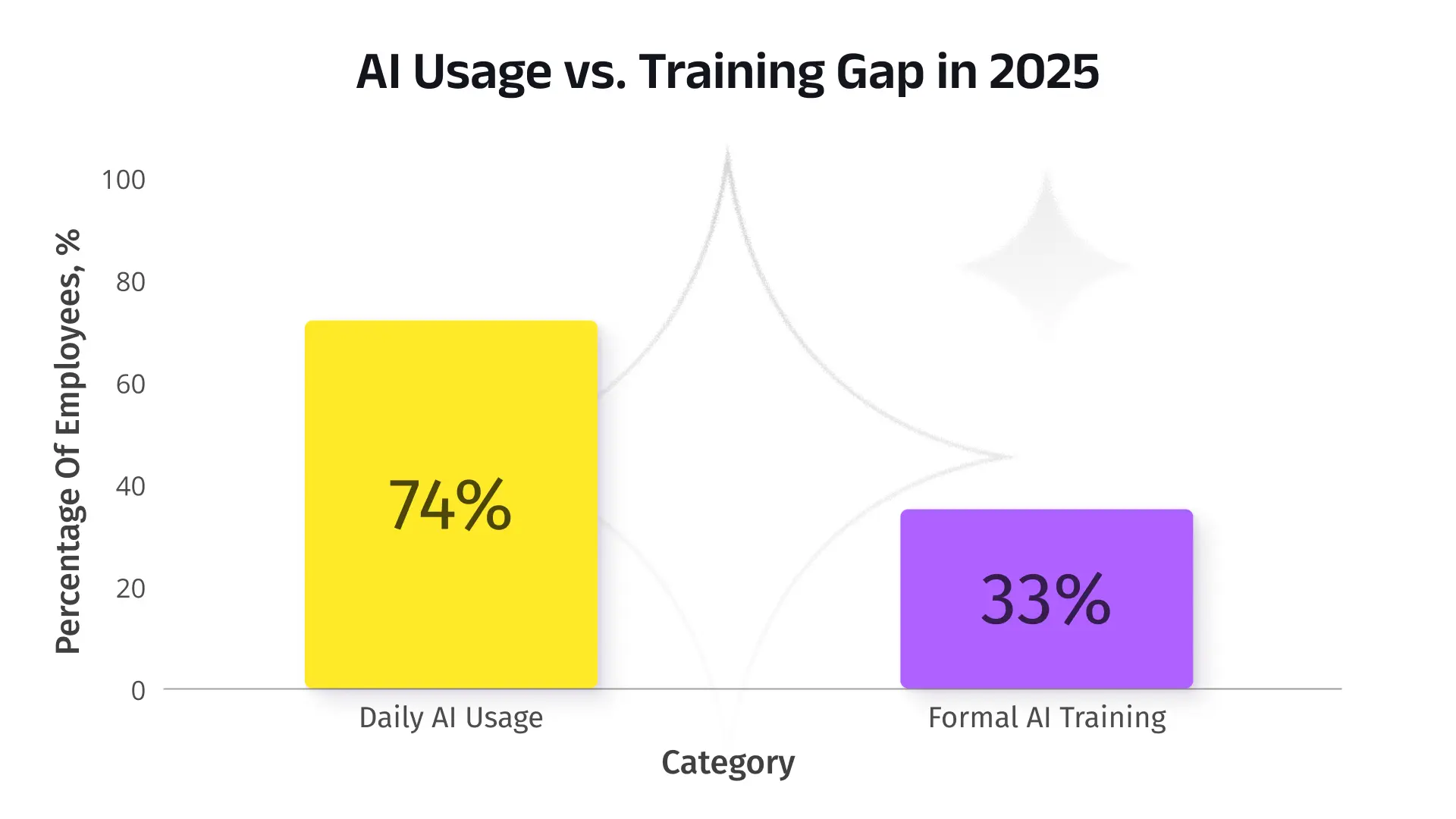

Training and Awareness

A gap persists between the widespread adoption of AI and the level of training employees receive to use it effectively. Survey data shows that while over 70% of staff experiment with AI at work, only about one-third receive any formal training. This mismatch increases the risk of bias, privacy lapses, and misuse. Organizations that invest in basic education (how to frame tasks, where errors occur, how to review outputs) reduce both wasted effort and reputational risk.

Ethics and Oversight

The collaborative model also requires safeguards. Bias in datasets, opaque decision-making, and misuse in sensitive domains (such as policing or hiring) highlight the need for oversight and transparency. Without these, even technically sound tools can undermine trust.

From Failures to Responsible Use

Artificial intelligence is one of the most powerful technologies of our time, but it is not a cure-all. Its value depends on the quality of the data it consumes, the clarity of the strategy behind it, and the willingness of organizations to recognize its limitations.

As the evidence shows, most AI initiatives fail, not because the technology lacks promise, but because it is misapplied, overhyped, left unchecked, or deployed without sufficient training. With MIT estimating that 95% of generative AI pilots fail, the message is clear: generic approaches lead to generic failures.

The path forward is not to abandon AI, but to adopt it with realism. The future belongs to collaborative intelligence, where AI amplifies human expertise rather than replacing it. Businesses that succeed will be those that pair AI’s scale with human oversight, combine experimentation with governance, and balance innovation with responsibility.

The call to action is clear:

- Invest in training: Bridge the 74%-33% gap to empower your workforce and reduce risks;

- Start small, experiment often: Run lean AI pilots to quickly identify what works (and what doesn’t) before scaling;

- Demand transparency: Vet vendors rigorously to avoid AI-washing pitfalls;

- Use AI for augmentation: Focus on co-pilot models, measuring success through precise KPIs.

At Timspark, we apply these principles every day through small, disciplined experiments that reveal blind spots and unlock ROI for our clients. If you’re considering AI adoption and want to avoid costly missteps, let’s talk.