The Journey of AI Evolution: Milestones and Future Prospects

Dzmitry Aleinik, Marketing Lead

Last updated: August 26, 2025

Understanding AI in a Nutshell

Artificial Intelligence (AI) broadly refers to machines emulating human intelligence – learning, reasoning, and problem-solving in ways that mimic human thinking. Modern AI’s powerful tools stem from decades of computing breakthroughs, from code-breaking machines in World War II to the development of the first neural networks in the 1950s. Over the past 70+ years, advances in algorithms and computing power have continuously pushed AI’s capabilities. Today, AI can tackle increasingly complex tasks, and we are witnessing the rapid emergence of new AI models with ever-greater functionality. Each new generation – from early rule-based systems to today’s deep learning giants – marks a step-change in what machines can do.

Stages of AI Evolution

Tracing the evolution of artificial intelligence is as fascinating as mapping human history. Different eras of AI research have brought winters of disappointment and springs of discovery. Here’s a brief journey through the pivotal stages that laid the foundation for today’s thriving AI ecosystem:

- Birth of AI (1950s–1960s): The conceptual dawn of AI began with Alan Turing’s famous 1950 paper, “Computing Machinery and Intelligence,” which proposed that machines could potentially simulate human intelligence and introduced what became known as the Turing Test, a way to judge whether a machine’s conversational behavior is indistinguishable from a human’s. In 1956, the Dartmouth Conference officially established AI as an academic field, bringing together pioneering researchers to explore how to make machines “think.” Early programs emerged that could play games like checkers and prove logical theorems.

- Early Successes (1960s–1970s): In the ensuing decades, enthusiasm for AI ran high. Dedicated AI laboratories sprang up with generous funding. Researchers taught computers to understand constrained versions of human language and to solve algebra and geometry problems. Notable achievements included ELIZA (1966), often called the first chatbot, which mimicked conversation through simple pattern matching, and DENDRAL (begun in the mid-1960s), widely regarded as the first expert system, which inferred molecular structures from chemical data. In Japan, Waseda University built WABOT-1 in 1972, often described as the first full-scale humanoid robot. These successes showed promise, but progress was slower than expected.

- First AI Winter (1970s): By the mid-1970s, the initial exuberance faded. Computing power and memory were too limited to fulfill AI’s lofty goals, and many projects stalled. Funding and interest dried up, leading to the field’s first “AI winter” – a period of reduced research activity and pessimism about AI’s future.

- Expert Systems Boom (1980s): AI experienced a revival in the 1980s with the rise of expert systems – programs that encapsulated human expertise in specific domains. Organizations invested in AI for practical applications, such as medical diagnosis and financial forecasting. Researchers realized that feeding AI vast knowledge bases was crucial to improving performance. This era saw AI deployed in industry under new monikers (to avoid the tainted “AI” label) and the first commercial success of AI software. However, these systems were brittle, and their maintenance proved expensive.

- Second AI Winter (late 1980s–1990s): The expert system boom was followed by another crash. As the costs and limitations of these systems became evident, investors pulled back again. In the late 1980s and early 1990s, AI funding was cut substantially, ushering in a second AI winter.

- Implementation and Integration (1990s–2000s): Through the 1990s, AI quietly became useful in niche ways. The term “AI” was often avoided due to its checkered reputation – instead, technologies were rebranded as knowledge-based systems, automation, or data mining. Nonetheless, progress continued. For example, speech recognition and handwriting recognition improved and found their way into consumer devices. AI started being integrated into industries like manufacturing (robots on assembly lines) and logistics, albeit behind the scenes.

- The Deep Learning Era (2010s): AI interest surged back in the 2010s, reaching what The New York Times called a “frenzy.” Two developments turbocharged AI’s progress: deep learning algorithms and big data. In 2012, a deep convolutional neural network called AlexNet won the ImageNet image-recognition challenge by a wide margin, a breakthrough widely credited with catalyzing modern AI. With ever-increasing computing power (especially GPUs) and massive datasets available, machine learning models kept getting better. This era saw virtual assistants like Siri and Alexa become household names, while self-driving car prototypes began hitting the roads. AI was no longer confined to laboratories; it became a core part of consumer technology.

- Modern AI Boom (2020s): We are now in what many describe as a new AI boom. Large-scale models trained on vast datasets have enabled AI systems to achieve astonishing capabilities. In particular, generative AI came to the forefront in the early 2020s: AI that can create content (text, images, audio, and code), often at a level that passes for human-made. AI has become widely accessible to the general public (consider how millions now use chatbots or image generators), and AI-driven automation is now commonplace across many industries. Language models in particular have become increasingly sophisticated, giving us tools that can write essays, answer complex questions, and hold conversations. With AI now solving problems once thought exclusive to human intelligence, excitement is at an all-time high, tempered by fresh concerns about AI’s societal impact.

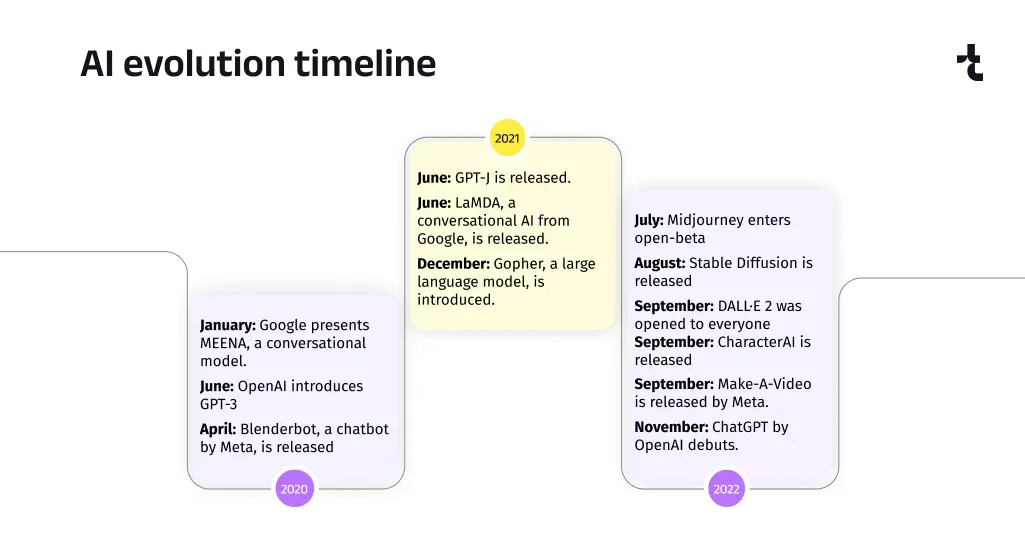

Recent Milestones: 2020 to 2025

To grasp how fast AI is evolving, consider the breakneck pace of milestones just in the past few years. Since 2020, one breakthrough has followed another, each expanding AI’s capabilities and accessibility:

2020 – The year 2020 set the stage for the generative AI revolution. In January, Google unveiled Meena, a then-state-of-the-art conversational model that hinted at more human-like dialogue. In April, Facebook (now Meta) released BlenderBot, an open-domain chatbot that could engage in multi-turn dialogue. Next came OpenAI’s GPT-3, described in a May 2020 paper and opened to developers through an API in June: a landmark language model with an unprecedented 175 billion parameters. GPT-3 demonstrated an ability to produce astonishingly coherent text and perform a wide array of tasks (from writing code to answering questions) from just a few examples in the prompt, without task-specific fine-tuning – a watershed moment that caught the world’s attention. These advances showed the power of large-scale neural networks and kicked off intense public interest in AI’s creative potential.

2021 – AI development accelerated further. In June 2021, EleutherAI, a grassroots open-source research collective, released GPT-J, a 6-billion-parameter model that showed respectable performance, hinting at the democratization of advanced AI. Google entered the fray with LaMDA, a conversation-focused large language model announced in May 2021 and geared toward open-ended dialogue. By year’s end, DeepMind unveiled Gopher, a 280-billion-parameter language model that achieved impressive results in reading comprehension and knowledge tests. These models demonstrated improving language understanding and sparked discussions about AI approaching human-level language abilities.

2022 – This year saw a Cambrian explosion of generative AI applications, especially in images and art. In mid-2022, Midjourney (July) and Stable Diffusion (August) launched, bringing powerful image generation capabilities to the public. Suddenly, anyone could create realistic or artistic images from a simple text prompt. In September 2022, OpenAI’s image model DALL·E 2 became available to everyone, turning imagination into visuals in seconds. The same month saw the debut of Character.AI, which let users create personalized chatbot “characters,” and Meta’s Make-A-Video prototype, which could generate short video clips from text – a hint at AI’s expanding multimodal prowess. But the year’s biggest headline came in late 2022: OpenAI’s ChatGPT launched as a free research preview in November and quickly went viral. ChatGPT – built on the GPT-3.5 model – could carry on fluent, wide-ranging conversations and answer questions on almost any topic. Within two months, it reached an estimated 100 million users, reportedly making it the fastest-growing consumer application up to that point. This remarkable adoption speed underscored a turning point: AI had truly entered the mainstream consciousness.

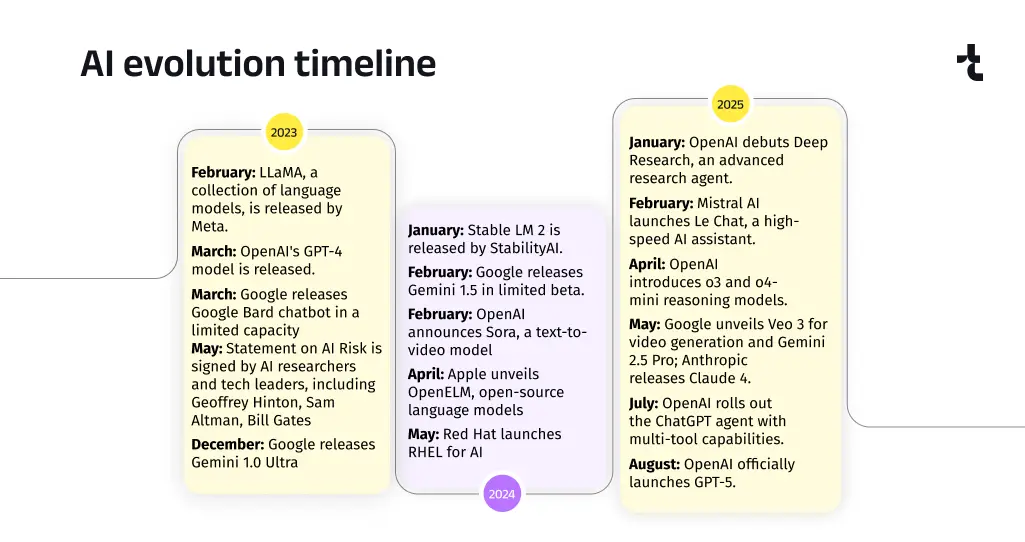

2023 – The momentum of generative AI continued unabated. In February 2023, Meta released LLaMA, a family of large language models whose weights were made available to researchers, signaling a push toward more accessible AI research. March 2023 brought the much-anticipated GPT-4 from OpenAI – a multimodal model (accepting text and image inputs) that demonstrated more advanced reasoning and creativity than its predecessor. The same month, Google launched Bard, its answer to ChatGPT, initially to a limited group of users. The proliferation of capable LLMs from multiple players illustrated an intensifying race in AI. In May 2023, concerns about AI’s rapid progress led hundreds of AI scientists and tech leaders, including Geoffrey Hinton, Sam Altman, and Bill Gates, to sign the one-sentence Statement on AI Risk, which declared that mitigating the risk of extinction from AI should be a global priority alongside other societal-scale risks such as pandemics and nuclear war. In December 2023, Google introduced Gemini, its next-generation multimodal model family. Gemini 1.0 came in three sizes (Ultra, Pro, and Nano), and according to Google, Ultra edged out GPT-4 on several benchmarks. Gemini Pro was rolled into Bard right away, while Ultra was initially available only to select partners. Overall, 2023 solidified the view that generative AI and large language models are here to stay – and improving fast.

2024 – In 2024, the focus shifted to refining models and expanding their modalities. The open-source AI movement gained further steam: in January, Stability AI introduced StableLM 2, an improved open large language model. In February, Google released Gemini 1.5 as a limited preview, showing iterative progress in its flagship model. The same month, OpenAI previewed Sora, a text-to-video model capable of creating short video clips from written prompts (an early glimpse at the future of AI in entertainment and content creation). Even Apple, usually secretive about its AI work, made waves in April 2024 by releasing OpenELM, a family of small open-source language models designed to run efficiently on-device, a notable step toward more open AI research for the company. In May 2024, enterprise software joined the AI party: Red Hat announced Red Hat Enterprise Linux AI (RHEL AI), integrating AI development tools into its enterprise Linux platform to facilitate AI workloads for businesses. By mid-2024, major tech companies were building generative AI into their core products (for instance, Microsoft 365 Copilot and the AI assistants in Google Workspace), and AI became a strategic priority in virtually every industry.

2025 – Now in 2025, AI’s evolution shows no signs of slowing. Generative AI has become an integral part of work and daily life, moving from a novel tool to a ubiquitous presence. Businesses have rapidly adopted AI-powered solutions: approximately 78% of organizations reported using AI in 2024, up from 55% just a year before. This year has also given us a first glimpse of more autonomous AI agents that can take initiative in performing tasks. For example, cutting-edge assistant AIs can now carry out multi-step jobs like managing a calendar, booking appointments, or ordering products online based on a simple request, checking in with the user only as needed. These agents go beyond earlier chatbots, and some are pitched as something like junior employees or research assistants. Early in 2025, OpenAI’s Operator and Deep Research showcased agents that browse websites on their own, the latter compiling research reports with minimal human guidance. Such use cases suggest we are transitioning from AI as a passive tool to AI as an active collaborator. Tech companies are also shipping new generations of foundation models: Google followed Gemini 2.0 (first released in December 2024) with Gemini 2.5 in March 2025, and OpenAI released GPT-5 in August 2025, with both emphasizing stronger reasoning and multimodal capabilities. Expectations are high that their successors could push AI even closer to human-like understanding. In sum, the current year has been about consolidating AI’s gains and preparing for the next leap. AI is now deeply embedded in enterprise software, cloud platforms, and consumer gadgets, and further breakthroughs could arrive at any time.

(It’s worth noting that alongside technological leaps, 2024–2025 also saw growing efforts to regulate AI: for instance, the European Union’s AI Act – the world’s first comprehensive AI law – was approved in 2024. Globally, conversations continue about how to ensure AI develops in a safe, ethical direction as it becomes ever more powerful.)

Having reviewed AI’s rapid evolution, from its mid-century inception to the transformative innovations of the 2020s, we can now explore how we classify today’s AI systems. These classifications help make sense of the wide variety of AI applications and their capabilities, especially as recent advancements blur the lines between science fiction and reality.

Decoding the Classifications of AI

AI is not a monolithic technology – it comes in many forms with different levels of capability and ways of operating. For businesses looking to leverage AI, it’s useful to understand the key categories that experts use to classify AI. Below, we break down AI by capability, by functionality, and by underlying technology. Each category sheds light on how an AI system works and what it can (or cannot) do, which in turn shapes its applications in the real world.

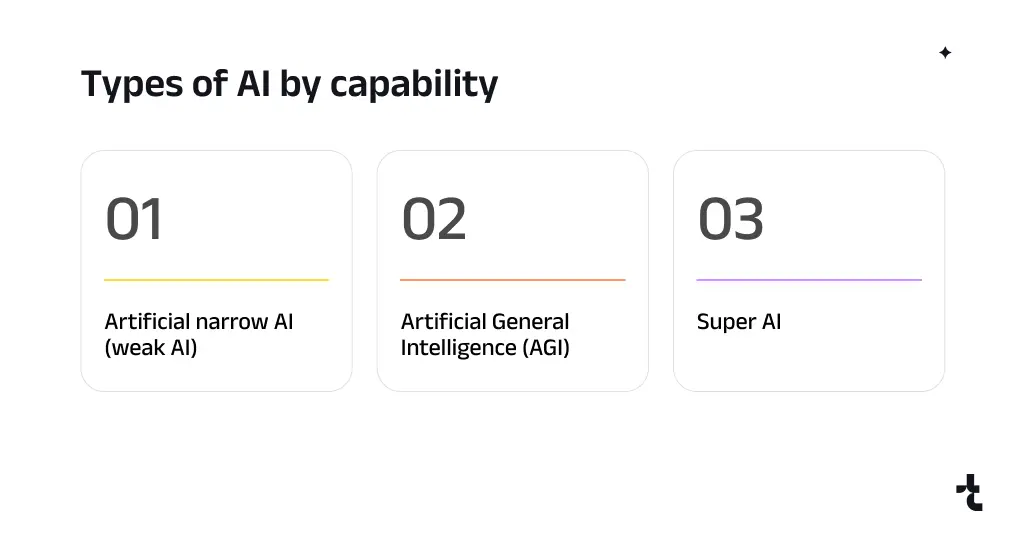

AI by Capability

From the perspective of capability, AI systems are often grouped into three broad types: Artificial Narrow Intelligence (ANI), Artificial General Intelligence (AGI), and Artificial Superintelligence (ASI). These categories describe an AI’s generality and competence relative to human intelligence.

Artificial Narrow Intelligence (ANI) – also known as “Weak AI,” this refers to AI that is designed for a specific task or a limited domain. Narrow AI is the only form of AI that exists today. Such systems can surpass human performance in their narrow area (for example, a chess engine that outplays the strongest human grandmasters, or a language model that can instantly translate between dozens of languages), but they cannot operate beyond the scope for which they’re designed. It’s as if you have a savant machine: extremely skilled at one thing, and clueless about anything else. Most of the AI we encounter daily falls into this category. A spam filter that catches junk and phishing emails, a recommendation algorithm that suggests movies, or an image recognition program that identifies faces – all are Narrow AI. Virtual assistants like Apple’s Siri or Amazon’s Alexa are classic examples, as they’re built to respond to voice commands and queries in a predefined manner. Even OpenAI’s ChatGPT, for all its versatile conversation, is usually classified as Narrow AI because it’s ultimately confined to one overarching task: generating coherent text in response to prompts. In short, ANI can be incredibly powerful in its specialty (often far more efficient than a human), but it lacks general awareness or adaptability beyond its training.

Artificial General Intelligence (AGI) – often termed “Strong AI,” AGI is a theoretical future AI that would possess the flexible learning and thinking capabilities of a human being. An AGI system could understand or learn any intellectual task that a human can, and apply knowledge from one domain to another. In other words, where today’s AI is narrow, AGI would be broad. It could reason, plan, and learn across diverse topics and contexts without needing additional specialized programming for each new situation. As of 2025, AGI does not yet exist; it remains a goal on the horizon. Researchers have not yet created a machine with the open-ended learning ability and common-sense understanding of a human child, let alone an adult. However, given how fast AI has progressed, many experts no longer consider AGI science fiction and see it as a matter of when (and how) rather than if. In fact, the CEOs of leading AI labs (OpenAI, Google DeepMind, and Anthropic) have all publicly suggested that human-level AGI could arrive within the next decade, and in some cases within the next few years. Such statements, while controversial, underscore that AGI is a serious research target. An AGI would be a game-changer: it could potentially perform any task a human can do (and more), which is why its development is both highly anticipated and approached with caution.

Artificial Superintelligence (ASI) – this category describes a hypothetical AI that surpasses human intelligence by an enormous margin. If AGI is human-level, superintelligence is beyond human-level in virtually every field: scientific creativity, general wisdom, social skills, you name it. An ASI could potentially solve problems that no human could solve, and might improve itself recursively to become even more powerful. It could also form its own goals and perhaps exhibit something akin to consciousness or self-awareness vastly more sophisticated than ours. Importantly, ASI remains entirely speculative in 2025; we have not created anything close to it. If AGI is a milestone, ASI would be the next one after that, where the machine intellect dwarfs human intellect. Some futurists imagine ASI could usher in an era of unprecedented prosperity (curing diseases, fixing climate change, etc.), while others warn it could pose an existential risk if not properly aligned with human values. Thankfully, we are not there yet. Achieving ASI would require breakthroughs we can’t fully envision today, and estimates of how soon it might follow AGI vary widely: some researchers argue that a self-improving AGI could reach superintelligence quickly, while others expect a much longer gap, if it happens at all. For now, ASI lives in theoretical discussions and science fiction, though it’s a topic of active debate because planning for it before it arrives might be crucial.

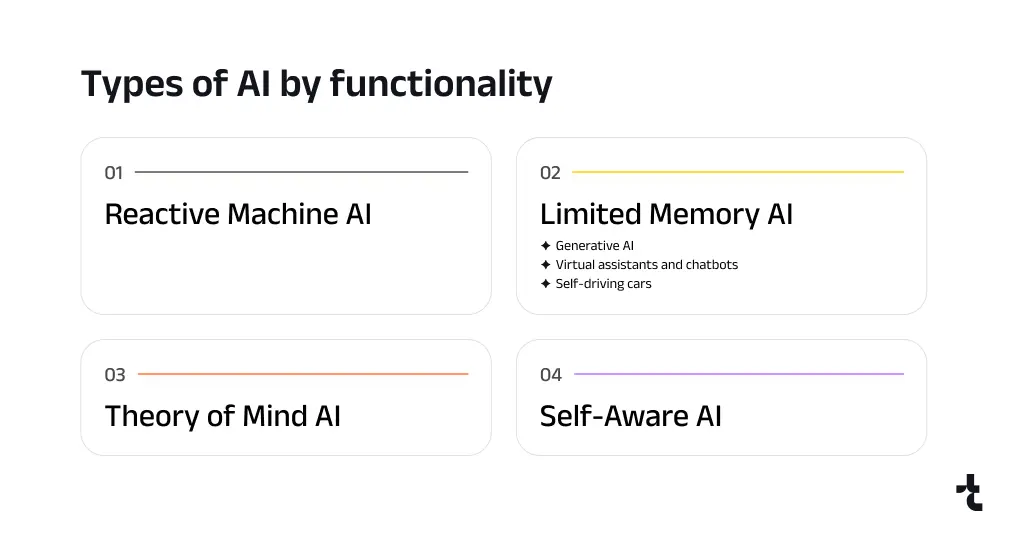

AI by Functionality

Another way to classify AI is by functional types – essentially, how an AI system operates or behaves based on its design. A common framework divides AI into four functional types: Reactive Machines, Limited Memory, Theory of Mind, and Self-Aware AI. This framework progresses from basic systems (that simply react to inputs) to hypothetical future systems with human-like understanding of minds.

Reactive Machines: This is the most basic type of AI system. Reactive machines have no memory of past events and no internal model of the world; they simply react to the current inputs they receive, based on pre-programmed rules or learned patterns. Such AI can handle specific tasks but cannot learn from experience or “think” beyond the immediate problem. A classic example is IBM’s Deep Blue, the chess-playing computer that famously beat Garry Kasparov in 1997. Deep Blue was a reactive system: it analyzed the chessboard configuration on the fly and selected the best move by brute-force search through millions of possible positions, but it had no memory of previous games or any conceptual understanding of “chess.” Recommendation engines such as Netflix’s are sometimes cited as another everyday example: a trained model takes your viewing history and preferences as its current input and maps them to suggestions, without forming memories of its own between requests. Reactive systems often rely on statistical patterns learned from huge datasets; they can produce what seems like intelligent behavior (e.g., “You might enjoy Stranger Things next!”), but they don’t truly understand or store context from one moment to the next. These systems are useful for narrow, repetitive tasks and can be very fast and reliable within their domain. However, they cannot adapt to changes or draw on past experience, since they retain nothing from earlier inputs once those inputs are processed.
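To make the reactive idea concrete, here is a minimal sketch of a reactive game player, assuming tic-tac-toe as a stand-in for chess. Like Deep Blue, it chooses a move by searching the game tree from the current board alone, and it keeps no state between calls. The board encoding (a nine-character string with `.` for empty squares) and all function names are illustrative choices, not anything taken from Deep Blue, which also relied on a tuned evaluation function and depth limits that this toy omits because tic-tac-toe can be searched exhaustively.

```python
from typing import List, Optional

# The eight ways to get three in a row on a 3x3 board (indices 0..8, row by row)
LINES = [(0, 1, 2), (3, 4, 5), (6, 7, 8),
         (0, 3, 6), (1, 4, 7), (2, 5, 8),
         (0, 4, 8), (2, 4, 6)]


def winner(board: str) -> Optional[str]:
    """Return 'X' or 'O' if that player has three in a row, else None."""
    for a, b, c in LINES:
        if board[a] != "." and board[a] == board[b] == board[c]:
            return board[a]
    return None


def empty_squares(board: str) -> List[int]:
    return [i for i, cell in enumerate(board) if cell == "."]


def play(board: str, square: int, player: str) -> str:
    """Return a new board with `player` on `square` (boards are immutable strings)."""
    return board[:square] + player + board[square + 1:]


def minimax(board: str, to_move: str) -> int:
    """Exhaustively score a position from X's point of view: +1 X wins, 0 draw, -1 O wins."""
    w = winner(board)
    if w is not None:
        return 1 if w == "X" else -1
    moves = empty_squares(board)
    if not moves:
        return 0  # full board with no winner: draw
    opponent = "O" if to_move == "X" else "X"
    scores = [minimax(play(board, m, to_move), opponent) for m in moves]
    # X picks the highest score, O the lowest
    return max(scores) if to_move == "X" else min(scores)


def best_move(board: str, to_move: str) -> int:
    """Choose a square using only the current board; nothing is remembered between calls."""
    opponent = "O" if to_move == "X" else "X"

    def score(m: int) -> int:
        return minimax(play(board, m, to_move), opponent)

    moves = empty_squares(board)
    return max(moves, key=score) if to_move == "X" else min(moves, key=score)
```

For example, `best_move("XX.OO....", "X")` returns `2`, the square that completes X’s top row. Calling it twice with the same board always gives the same answer, which is exactly the "no memory" property described above.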

Limited Memory: As the name suggests, this type of AI can remember some past information and use it to inform current decisions. Limited Memory AI is built to handle sequence and context to a certain extent. Practically all modern AI that we interact with falls into this category. For example, a self-driving car is designed with limited memory: it keeps recent sensor data in memory (like the last few seconds of camera footage, LiDAR readings, etc.) to understand how the environment is changing and to make decisions like changing lanes or braking. It can’t, however, accumulate knowledge over years or remember every driving scenario forever; its memory is transient and task-specific. Many generative AI models also rely on limited memory. When you chat with ChatGPT or Google Gemini (formerly Bard), the system “remembers” earlier parts of the conversation, up to the limit of its context window, to respond coherently. It uses that recent context to predict the next words, but the underlying model does not retain the conversation afterward; products that seem to remember across sessions, such as ChatGPT’s optional memory feature, broadly do so by storing notes separately and feeding them back in as context. Similarly, virtual assistants like Siri or Alexa process your current request in light of recent interactions (e.g., maintaining context for a follow-up question) and then largely reset. Limited Memory AI thus learns from historical data and can look back over a short window of the past, but it doesn’t update its long-term understanding with every interaction. Instead, such systems improve over time as they are retrained on more data: nearly all state-of-the-art AI models today, from image generators to chatbots, operate on this principle of limited memory and are periodically retrained on growing datasets to enhance performance. For businesses, this category of AI is particularly powerful; it includes everything from customer service chatbots to fraud detection systems that learn patterns from historical transactions. The key limitation is that these AIs don’t truly “learn on the fly” in deployment beyond a certain point; they rely on what they’ve been explicitly trained on, plus a short memory of recent inputs.
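A limited-memory system can be sketched with a fixed-size buffer. The hypothetical monitor below, loosely modeled on the self-driving example, keeps only the last few distance readings to a vehicle ahead, estimates how fast the gap is closing, and automatically forgets anything older than its window. The sensor interface, the 0.1-second sampling interval, the window size, and the 3 m/s braking threshold are all illustrative assumptions, not values from any real driving system.

```python
from collections import deque


class FollowingDistanceMonitor:
    """Toy limited-memory controller: remembers only the most recent `window` readings."""

    def __init__(self, window: int = 5, dt_s: float = 0.1, brake_speed: float = 3.0) -> None:
        # deque(maxlen=...) silently discards the oldest reading once the window is full
        self.readings: deque = deque(maxlen=window)
        self.dt_s = dt_s                # assumed time between sensor readings, in seconds
        self.brake_speed = brake_speed  # assumed closing speed (m/s) that triggers braking

    def update(self, distance_m: float) -> str:
        """Record a new distance reading and return 'brake' or 'hold'."""
        self.readings.append(distance_m)
        if len(self.readings) < 2:
            return "hold"  # not enough history yet to estimate a trend
        elapsed = (len(self.readings) - 1) * self.dt_s
        # Average closing speed over the remembered window (positive = gap shrinking)
        closing_speed = (self.readings[0] - self.readings[-1]) / elapsed
        return "brake" if closing_speed > self.brake_speed else "hold"
```

The window is the whole point of the design: with `window=3`, feeding readings of 30.0, 30.0, 30.0, 29.5, and 29.0 meters yields "hold" four times and then "brake", and by then the first two readings have already been dropped. A chatbot’s context window behaves analogously, with tokens in place of sensor readings.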

Theory of Mind: This type of AI is more aspirational and refers to systems that could understand human emotions, beliefs, and thought processes. The term “theory of mind” in psychology is about the ability to attribute mental states to others – knowing that other people have their own desires, intentions, and perspectives different from one’s own. An AI with a “Theory of Mind” functionality would, in principle, be able to infer the needs or emotional state of the user interacting with it and adjust its behavior accordingly. This goes beyond today’s AI, which might detect emotion in a voice or text (e.g., sentiment analysis) but doesn’t truly grasp the nuance of human mental states. Theory of Mind AI would, for instance, recognize if a person is frustrated by repeated misunderstandings and change its approach, or it might understand complex human social cues. This category is largely hypothetical right now – we do not yet have AI that possesses a genuine understanding of emotions or individuality. Some advanced research is exploring aspects of this (for example, AI models that can take an “empathic” tone in customer service, or experimental systems termed “Emotion AI” that try to respond to human feelings), but these are early steps. In summary, Theory of Mind AI is envisioned as AI that interacts with humans in a truly relational way, with personalization and empathy, forming something akin to social relationships. It remains an unrealized goal, tied closely to achieving AGI, since understanding others’ minds likely requires a broad, general intelligence.

Self-Aware AI: This final category represents the ultimate stage – AI that has its own consciousness and self-awareness. A Self-Aware AI would understand itself as an entity. It would not only comprehend human emotions and minds (Theory of Mind), but also possess its own internal states, reflections, and possibly even feelings. In fiction, this is the realm of sentient robots that realize they exist (think of characters like Data from Star Trek or the hosts in Westworld). In reality, we have not built anything remotely close to self-aware AI. This idea is entirely theoretical as of now. If it were achieved, such an AI would likely also qualify as an Artificial Superintelligence – since having self-awareness combined with the speed and knowledge of a machine could enable capabilities far beyond human. Understandably, the prospect of self-aware AI raises deep ethical and philosophical questions (Would it have rights? How would we communicate or coexist with it?). For the foreseeable future, however, Self-Aware AI remains a concept for thought experiments. Researchers are very far from understanding or creating consciousness in machines. It’s possible we may never intentionally create it. But it’s useful to define this category as a limit case for thinking about AI functionality.

AI by Underlying Technology

Apart from capability or functionality, we can also classify AI by the core techniques or technologies it employs. This is a practical classification that aligns with how businesses choose AI solutions for specific needs. Key types include Machine Learning, Deep Learning, Natural Language Processing, Computer Vision, Robotics, and Expert Systems. Each represents a different sub-field of AI with its own methods and typical applications.

Machine Learning (ML): Machine Learning is the backbone of most modern AI. It refers to algorithms that enable computers to learn from data and experience, rather than by being explicitly programmed with fixed rules. In ML, models improve their performance on tasks as they are exposed to more data over time. There are various branches of ML – from supervised learning (learning with labeled examples) and unsupervised learning (finding patterns in unlabeled data) to reinforcement learning (learning by trial and error with rewards). In business, ML is everywhere. It’s used in finance to detect fraud by recognizing anomalies in transaction data, in marketing to segment customers and predict churn, and in operations to forecast demand or optimize supply chains. A simple example is a predictive maintenance model in manufacturing, which learns from sensor data to predict when a machine is likely to fail. The benefit of ML for businesses is that it turns raw data into insights or predictions – enabling data-driven decision making. ML models can uncover hidden trends, classify complex datasets (like diagnosing diseases from medical images), or personalize user experiences (such as content recommendations). Embracing ML often leads to improved efficiency and can even reveal new opportunities, since the algorithms might surface non-intuitive patterns humans miss. The key is that ML requires good data – the quality and quantity of training data directly affect the model’s performance.
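The supervised-learning loop (learn from labeled examples, then predict on new data) can be shown with one of the simplest learners there is, a nearest-centroid classifier, applied to the predictive-maintenance example. This is a minimal sketch: the sensor readings and labels are made up, and the two features (temperature and vibration) are assumptions for illustration. Real predictive-maintenance models typically use many more signals and more capable algorithms.

```python
from math import dist
from statistics import fmean
from typing import Dict, List, Sequence, Tuple

Features = Tuple[float, ...]

# Made-up history: (temperature °C, vibration mm/s) -> what happened to the machine next
HISTORY: List[Tuple[Features, str]] = [
    ((60.0, 2.0), "ok"),
    ((65.0, 2.5), "ok"),
    ((70.0, 3.0), "ok"),
    ((85.0, 7.0), "failed"),
    ((90.0, 8.5), "failed"),
    ((95.0, 9.0), "failed"),
]


def train(examples: Sequence[Tuple[Features, str]]) -> Dict[str, Features]:
    """'Training' here means averaging the feature vectors seen for each label."""
    by_label: Dict[str, List[Features]] = {}
    for features, label in examples:
        by_label.setdefault(label, []).append(features)
    return {label: tuple(fmean(column) for column in zip(*rows))
            for label, rows in by_label.items()}


def predict(centroids: Dict[str, Features], features: Features) -> str:
    """Assign the label whose average (centroid) is closest in Euclidean distance."""
    return min(centroids, key=lambda label: dist(centroids[label], features))
```

With `model = train(HISTORY)`, a new reading of `(88.0, 7.5)` is classified as "failed" and `(62.0, 2.2)` as "ok". Because the features are unscaled, temperature dominates the distance here; a real pipeline would normally standardize features first, which is one small example of why data quality and preparation matter so much in ML.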

Deep Learning (DL): Deep learning is a subset of machine learning, but it’s significant enough to mention on its own. It refers to neural networks with many layers (“deep” neural networks) that can learn extremely complex patterns. Deep learning has been the driver of the recent AI breakthroughs in vision, speech, and language. These multi-layered networks can automatically learn representations of data at multiple levels of abstraction. For instance, a deep learning model for image recognition might start by detecting edges, then shapes, then complex features like eyes or wheels, allowing it to ultimately recognize objects like cats or cars in a photo. In speech, deep networks turn waveform audio into text by learning phonetic and linguistic representations. One notable aspect of deep learning is that it often doesn’t require manual feature engineering; the model learns the important features by itself given sufficient data. In the business context, deep learning has opened up new possibilities that were previously out of reach. For example, in healthcare, deep learning models can analyze MRI scans or X-rays to help detect tumors, in some studies with accuracy comparable to expert radiologists, aiding in diagnostics. In customer service, deep learning powers advanced chatbots with natural language understanding, enabling more human-like interactions. Deep learning has also been critical for autonomous driving, enabling vehicles to interpret camera feeds, LiDAR, and radar data to understand road conditions. The benefit of deep learning is its accuracy and capability on complex tasks: if you have a large amount of data, deep learning can often find patterns that other methods miss. The trade-off, however, is that deep learning models can be like black boxes (less interpretable) and often require very high computational resources and lots of training data. Nonetheless, as computing power (and specialized AI chips) has grown, deep learning has become increasingly viable and is at the heart of many cutting-edge AI products.
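The "multiple levels of abstraction" idea can be seen in a network small enough to check by hand. The sketch below is a two-layer network that computes XOR (output 1 when exactly one input is 1), a function no single linear layer can represent: the hidden layer computes two intermediate features, and the output layer combines them. The weights are hand-set for illustration only; in real deep learning they would be learned from data by gradient descent and backpropagation.

```python
from typing import List

# Hand-set (not learned) weights for a 2-input -> 2-hidden-unit -> 1-output network
W1: List[List[float]] = [[1.0, 1.0],   # hidden unit 1: nonzero when at least one input is on
                         [1.0, 1.0]]   # hidden unit 2: with its bias, nonzero only when both are on
B1: List[float] = [0.0, -1.0]
W2: List[float] = [1.0, -2.0]          # output = unit1 - 2 * unit2
B2: float = 0.0


def relu(x: float) -> float:
    """Rectified linear unit, a common neural-network activation."""
    return max(0.0, x)


def forward(x1: float, x2: float) -> float:
    # Layer 1: each hidden unit is a weighted sum of the inputs passed through ReLU
    hidden = [relu(w[0] * x1 + w[1] * x2 + b) for w, b in zip(W1, B1)]
    # Layer 2: a weighted combination of the hidden features
    return sum(w * h for w, h in zip(W2, hidden)) + B2
```

Evaluating `forward` on the inputs (0, 0), (0, 1), (1, 0), and (1, 1) gives 0.0, 1.0, 1.0, and 0.0. Stacking many such layers, with millions of learned weights instead of six hand-picked ones, is what lets deep networks build up from edges to shapes to objects.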

Natural Language Processing (NLP): NLP is the branch of AI that deals with understanding and generating human language. It combines linguistics and computer science to allow machines to read, interpret, and produce human language in a valuable way. Recent advances in NLP, especially with large language models like GPT, have been astounding – machines can now translate between dozens of languages, summarize texts, answer questions about documents, and even draft coherent articles. In business, NLP is transformative wherever large amounts of text or speech data are involved. Customer service is a prime example: NLP powers chatbots and voice assistants that can handle customer inquiries without human agents, at least for basic queries. Virtual assistants – from Siri and Alexa to specialized enterprise chatbots – use NLP to understand user requests and respond meaningfully. In industries like legal or finance, NLP algorithms can automatically analyze documents and contracts to extract key information or flag compliance issues, saving countless hours of manual review. NLP also helps in sentiment analysis – scanning social media or reviews to gauge public opinion about a brand or product. Another emerging use is in content generation and copywriting, where AI tools draft marketing copy or reports that humans then refine. The big benefit for businesses is that NLP can turn unstructured data (like a stack of customer emails, support tickets, or news articles) into structured insights or automate interactions, leading to huge efficiency gains. It allows companies to scale their communications and data analysis far beyond what human staff could manage in the same time.
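Sentiment analysis is one NLP task simple enough to sketch in a few lines. The version below is a deliberately naive, lexicon-based scorer with a one-word negation rule; the word list and weights are invented for illustration. Modern systems, including those built on large language models, learn these associations from data instead and handle sarcasm, context, and longer-range negation far better.

```python
import re

# Hypothetical mini-lexicon: word -> polarity weight
LEXICON = {"love": 2, "great": 2, "good": 1, "fast": 1,
           "slow": -1, "bad": -1, "broken": -2, "terrible": -2}
NEGATORS = {"not", "never", "no"}


def sentiment(text: str) -> str:
    """Classify text as 'positive', 'negative', or 'neutral' by summing word weights."""
    tokens = re.findall(r"[a-z']+", text.lower())
    score = 0
    for i, token in enumerate(tokens):
        value = LEXICON.get(token, 0)
        # Flip polarity when the previous word is a simple negator ("not good")
        if i > 0 and tokens[i - 1] in NEGATORS:
            value = -value
        score += value
    if score > 0:
        return "positive"
    if score < 0:
        return "negative"
    return "neutral"
```

Run over a batch of reviews, `sentiment("I love it, shipping was fast!")` returns "positive" and `sentiment("The product is not good.")` returns "negative". Counting the labels across thousands of reviews is a crude version of the brand-monitoring use case described above: unstructured text turned into a structured signal.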

Computer Vision: Computer vision is the field of AI that enables machines to interpret and make decisions based on visual images or videos. It’s essentially giving eyes to computers – and the “brain” to understand what they see. Thanks to deep learning, computer vision has dramatically improved; AI systems can now recognize and classify objects in images, detect faces, read handwriting, and even interpret the content of videos. In business, computer vision opens up many innovative use cases. In manufacturing, for example, automated visual inspection systems use cameras and AI to spot defects on assembly lines far more quickly (and consistently) than a human could. In retail, computer vision can power smart shelf systems that monitor inventory in real time or enable cashier-less checkout experiences (as seen in Amazon Go stores). Marketing teams use vision algorithms to analyze how consumers visually engage with products or advertisements (through heatmaps of where eyes focus). In agriculture, drones equipped with AI vision survey crops to assess health, detect pests, or guide precision spraying. Even in everyday apps, computer vision is at work – think of your smartphone automatically tagging people in photos, or AR (augmented reality) filters on social media tracking your face. For businesses, the value of computer vision is in automating tasks that involve visual data. It can perform quality control, ensure safety (e.g., identifying when people on a construction site aren’t wearing helmets), personalize customer experiences (like virtual try-on for clothing using your camera), and provide rich analytics (foot traffic patterns in stores, etc.). Essentially, any process that a human does by “looking” can potentially be augmented or replaced by a computer vision system that works faster and at scale. 
As cameras become ubiquitous and high-resolution, and as models improve, expect computer vision to further revolutionize areas like security, healthcare (e.g., analyzing medical scans), and transportation (self-driving car vision) in the coming years.

- Robotics: Robotics and AI often go hand-in-hand. Robotics is about designing and building machines (robots) that can carry out actions in the physical world, and when you embed AI into those robots, you get intelligent robots that can make decisions or adapt to their environment. Not all robots are “smart” – a simple factory robot might just repeat a set of motions. But increasingly, robots are getting AI brains. In manufacturing and logistics, robots do everything from assembling cars to sorting packages in warehouses. With AI, these robots can become more flexible – for instance, a robot arm with computer vision can identify different objects on a conveyor belt and handle them accordingly, rather than doing the exact same motion every time. In warehouses, autonomous mobile robots (basically, smart carts) navigate around people and shelves to move goods efficiently. In the retail sector, some stores use small robots for inventory scanning or cleaning floors automatically. Robotics also extends to drones (flying robots) for delivery or surveillance, and to service robots, such as surgical robots that assist surgeons in hospitals or delivery robots that bring room service in hotels. The benefit of robotics in business is largely about automation of physical tasks: robots can operate 24/7, don’t get tired, and can perform tasks in environments that might be hazardous for humans. They also deliver precision – for example, a robotic arm can place components on a circuit board with micrometer accuracy thousands of times with very few errors. Coupled with AI, robots become even more valuable because they can adapt – a delivery robot can plan a new route if its path is blocked, or a collaborative robot can work safely alongside humans, adjusting its force if it senses a person nearby. For companies, deploying AI-driven robots can increase productivity, lower labor costs, and improve safety.
As the technology advances, we’re likely to see robots moving out of controlled factory settings into more public and dynamic environments (like self-driving cars on roads, or robotic assistants in offices and homes), a big leap that is already underway.

- Expert Systems: This is a somewhat older category of AI technology, but it remains relevant in certain domains. Expert systems attempt to mimic the decision-making ability of a human expert in a specific field. They do this using a knowledge base of facts and rules, and an inference engine that applies the rules to the known facts to deduce new information or make recommendations. Expert systems rose to prominence in the 1970s and became a dominant AI application in the 1980s. Classic examples include MYCIN, which diagnosed blood infections, and DENDRAL (mentioned earlier), which identified chemical compounds. Today, while pure rule-based expert systems have largely been surpassed by machine learning methods, the concept survives in various forms (like rule-based components within larger AI systems). In industries like healthcare, finance, or law, expert systems (or modern AI that serves a similar purpose) help aggregate and apply specialist knowledge. For instance, a medical diagnosis system might ask a series of questions about a patient’s symptoms and medical history, then, using an internal knowledge base curated by doctors, suggest possible diagnoses or treatments. In finance, an expert system might be used for credit scoring or loan approval decisions, using defined rules on top of customer data. The benefit of expert systems is that they provide consistent, rule-based decisions and can encode expertise that is scarce. They’re also transparent in that the rules can be inspected (unlike a neural network, whose internal reasoning is largely opaque). However, they lack the learning aspect – a traditional expert system doesn’t improve with more data unless a human updates the rules. Many modern AI solutions in enterprises actually blend expert system logic with machine learning – for example, a virtual assistant might use ML to interpret a user’s query (NLP), but then use a rule-based system to decide which business action to execute in response. This hybrid approach offers both adaptability and reliability.
For companies looking to capture the knowledge of their best employees or standardize decision processes, expert-system principles still prove useful, especially when combined with contemporary AI techniques.
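To make the "knowledge base plus inference engine" idea concrete, here is a minimal forward-chaining sketch in Python. It assumes a toy loan-screening domain echoing the credit-scoring example above; the rule and fact names are hypothetical, and real expert-system shells add far richer rule languages, conflict resolution, and explanation tools.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Rule:
    """IF every premise is a known fact THEN the conclusion becomes a fact."""
    premises: frozenset[str]
    conclusion: str


def forward_chain(facts: set[str], rules: list[Rule]) -> tuple[set[str], list[str]]:
    """Fire rules repeatedly until no new facts can be derived.

    Returns the final fact set plus a trace of the rules that fired,
    the kind of inspectable reasoning that makes rule-based systems transparent.
    """
    known = set(facts)
    trace: list[str] = []
    changed = True
    while changed:
        changed = False
        for rule in rules:
            if rule.premises <= known and rule.conclusion not in known:
                known.add(rule.conclusion)
                trace.append(f"{' & '.join(sorted(rule.premises))} -> {rule.conclusion}")
                changed = True
    return known, trace


# Hypothetical knowledge base for a toy loan-screening assistant.
RULES = [
    Rule(frozenset({"stable_income", "low_debt"}), "good_credit_profile"),
    Rule(frozenset({"good_credit_profile", "collateral_provided"}), "recommend_approval"),
    Rule(frozenset({"missed_payments"}), "flag_for_manual_review"),
]

if __name__ == "__main__":
    facts, trace = forward_chain({"stable_income", "low_debt", "collateral_provided"}, RULES)
    for step in trace:
        print(step)
    print("recommend_approval" in facts)
```

Note that nothing here learns from data: changing the system’s behavior means a person editing `RULES`, which is exactly the limitation described above.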


As AI technology continues to evolve, these classifications aren’t silos – they often overlap in real applications. A self-driving car, for instance, uses computer vision (to see lanes and vehicles), deep learning and limited memory (to make driving decisions from data), robotics (to control the vehicle), and even elements of an expert system (traffic rules encoded). What’s clear is that AI in all its forms is opening new avenues for innovation across the board. From optimizing supply chains with predictive analytics to transforming customer service with chatbots and personal assistants, the possibilities are expansive. Businesses that embrace these AI tools – whether off-the-shelf solutions or custom AI systems – stand to gain a significant competitive edge in efficiency, insight, and the ability to deliver new value to customers.
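The robotics discussion above mentions a delivery robot that plans a new route when its path is blocked; at its core that is a graph-search problem. The sketch below illustrates it with breadth-first search on a small grid, assuming a known 2D map, four-directional moves, and equal cost per step. The map and helper names are hypothetical, and production robots and vehicles use considerably more sophisticated planners and sensor fusion.

```python
from __future__ import annotations

from collections import deque

Cell = tuple[int, int]  # (row, column)

# Hypothetical warehouse map: '.' is free floor, '#' is a shelf or obstacle.
WAREHOUSE = [
    "....",
    ".##.",
    "....",
]


def plan_route(grid: list[str], start: Cell, goal: Cell) -> list[Cell] | None:
    """Breadth-first search: shortest 4-connected path, or None if unreachable."""
    rows, cols = len(grid), len(grid[0])
    came_from: dict[Cell, Cell | None] = {start: None}
    queue = deque([start])
    while queue:
        current = queue.popleft()
        if current == goal:
            # Follow parent links back to the start, then reverse.
            path: list[Cell] = []
            node: Cell | None = current
            while node is not None:
                path.append(node)
                node = came_from[node]
            return path[::-1]
        r, c = current
        for nr, nc in ((r + 1, c), (r - 1, c), (r, c + 1), (r, c - 1)):
            in_bounds = 0 <= nr < rows and 0 <= nc < cols
            if in_bounds and grid[nr][nc] != "#" and (nr, nc) not in came_from:
                came_from[(nr, nc)] = current
                queue.append((nr, nc))
    return None


def block(grid: list[str], cell: Cell) -> list[str]:
    """Copy of the map with one extra obstacle, e.g. a person or a dropped box."""
    r, c = cell
    return grid[:r] + [grid[r][:c] + "#" + grid[r][c + 1:]] + grid[r + 1:]


if __name__ == "__main__":
    route = plan_route(WAREHOUSE, (0, 0), (2, 3))
    print("initial route:", route)
    # Sensors report that the next cell on the route is now blocked, so replan.
    detour = plan_route(block(WAREHOUSE, route[1]), (0, 0), (2, 3))
    print("replanned route:", detour)
```

Because every move costs the same in this sketch, breadth-first search already returns a shortest path; with varying costs (congestion, slopes) one would typically switch to Dijkstra’s algorithm or A*.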

AI in 2025 and Beyond: Future Trends and Prospects

With AI advancing so rapidly, a pressing question is: what’s next?

Having charted AI’s evolution up to the present, we now turn to the future – the anticipated milestones, emerging trends, and potential breakthroughs that could define the remainder of the decade. The horizon of AI is brimming with possibilities that sound astonishing, and yet, many experts believe they are within reach sooner than we might think. Here are some key forecasts and prospects for AI’s near future:

- From Generative AI to AI Agents: If 2022–2023 was the era of generative AI models producing content, the coming years are poised to be the era of AI agents. These are systems that not only generate information but can also take actions autonomously on our behalf. We’ve already seen the early signs in 2025: personal AI assistants that can execute tasks like scheduling meetings or making online purchases end-to-end, and business agents that can run a workflow (for example, scanning incoming emails, drafting responses, and only flagging what needs human approval). Over the next couple of years, expect these agents to become far more capable and commonplace. They will be able to use tools, access services, and interact with each other. In practical terms, tomorrow’s AI agent might handle your travel booking by searching options, comparing prices, making reservations, and adding the itinerary to your calendar – all through a simple high-level request from you. In workplaces, AI agents could function like tireless team members: a sales agent AI could automatically generate leads and personalized outreach; an HR agent could screen resumes and schedule interviews; an IT support agent could troubleshoot common issues for employees. This shift from AI as a tool to AI as an autonomous workforce could dramatically boost productivity. However, it also raises questions about oversight: experts note that defining boundaries for what agents can or cannot do, and maintaining human supervision, will be crucial. Still, the trajectory is clear: AI will evolve from simply assisting with single tasks to handling entire processes, collaborating with humans in a more agentive role.

- Accelerating AI by AI (AutoGPT and beyond): One fascinating prospect is AI systems that help create better AI systems. We’re already seeing the first instances of this: companies like OpenAI and Google are using AI to optimize model architectures or to write parts of their own code. In 2026 and 2027, this trend could take off in a big way. Some research forecasts suggest that by 2027, AI-driven tools could significantly automate AI research and development – essentially AIs coding and improving themselves or other AIs. Approaches such as automated machine learning (AutoML) and autonomous agent projects like AutoGPT hint at this self-reinforcing cycle: AI that can generate model designs, run experiments, and iterate with far less human intervention. The implications are profound – it could lead to an explosion of AI capabilities as the cycle time for improvements shrinks dramatically. A scenario analysis by one AI think tank (the AI 2027 project) predicts that expert-level AI agents could start designing more advanced AI models by 2027, potentially yielding artificial superintelligence (ASI) by the end of that year. While that timeline is speculative, the logic is that once AI can improve itself, progress might go from linear to exponential. In more concrete, near-term terms, we can expect tools that automate coding (GitHub Copilot already exists, and future versions will handle more of the software development lifecycle), and AI that can optimize business processes by dynamically learning and tweaking workflows. Companies should watch this closely: leveraging AI to build AI could become a major competitive advantage, enabling the creation of custom models or solutions faster and cheaper. It’s a bit meta, but very powerful – the better AI gets, the faster it can help us create even better AI.

- Closer to Human-Like Intelligence: While no one can say for certain when AGI (Artificial General Intelligence) will arrive, many leading figures believe it’s not in the distant future but possibly within this decade. When multiple CEOs of top AI labs say AGI may be just five years away, it signals that, at least in the view of the people building these systems, research is closer to that threshold than ever before. If and when AGI emerges – an AI that truly understands and learns any task like a human – it would be a watershed moment. Such an AI could potentially read and comprehend an entire library’s worth of knowledge, then turn around and, say, design a new drug, compose a symphony, or devise a business strategy, all without specialized training for each task. Early hints of AGI-like behavior are appearing in the largest models we have now, albeit inconsistently. For example, GPT-4 and other frontier models have surprised researchers by solving problems or adapting to tasks that weren’t explicitly part of their training, suggesting some level of general reasoning. Moving forward, we anticipate models with improved reasoning and memory; efforts like OpenAI’s o1 reasoning models and GPT-5 are pushing in this direction. These models aim to approach complex problems in a more human-like, step-by-step way (sometimes called chain-of-thought reasoning). If they succeed, we’ll see AI tackling tasks that require deeper understanding, like interpreting legal contracts or diagnosing an illness from medical records, with greater accuracy and reliability. Even without full AGI, each incremental step gives businesses more powerful tools. It’s also likely we’ll see multi-modal AI become standard – the ability to seamlessly handle text, images, audio, and even video in one model.
By around 2025–2026, interacting with an AI might mean speaking to it (voice), showing it things (images or diagrams), or having it watch a video, with the model integrating all of that input intelligently. Imagine a future customer service AI you can talk to naturally, that can also see a picture of the product issue you’re facing, and perhaps even show you a video of how to fix it. The convergence of modalities will make AI far more useful and intuitive.

- New Industry Transformations: Each wave of AI has unlocked new industry applications, and upcoming AI capabilities will do the same. In the late 2020s, we might witness transformations in areas that so far have only been lightly touched by AI. Healthcare could be revolutionized by AI, with models that can synthesize vast medical literature and patient data to provide personalized treatment recommendations (early versions of this are in the works). Education might see AI tutors that adapt to each student’s learning style and pace, providing individualized teaching at scale. In finance, AI may handle more complex tasks like real-time risk management, or even act as autonomous trading agents that operate within ethical and regulatory bounds. Creative fields will see AI as a collaborator – tools that can co-design products, generate scripts or storyboards for movies, or compose music tailored to a creator’s needs. Science and engineering will benefit from AI that can hypothesize and simulate experiments (for instance, proposing molecular designs for new materials or drugs, which a human team then tests). Some of this is already happening in rudimentary form, but the next few years could greatly refine these applications. It’s worth noting that as AI permeates more sectors, the line between “tech” companies and others blurs – virtually every company might become in part an AI company, leveraging these capabilities in their domain. Organizations that stay at the forefront of adopting AI for their industry’s challenges will likely outperform those that lag behind. We’ve seen early evidence: companies embedding AI in marketing, customer analytics, and operations have reported significant boosts in productivity and customer satisfaction. By 2025, surveys such as McKinsey’s were already finding that roughly three-quarters of organizations had adopted AI in at least one business function.
That percentage will only grow, and we’ll probably stop distinguishing “AI companies” from “non-AI companies” – much like today, we don’t label businesses “internet companies” because using the internet is a given. AI will become a standard layer of business infrastructure.

- Superintelligence and its Challenges: Looking a bit further out, discussions are intensifying around artificial superintelligence (ASI) – the scenario where AI doesn’t just match human intelligence but far exceeds it. Some experts from the AI 2027 project argue that if current trends continue, we could plausibly reach a point by the end of this decade where AI systems are vastly superhuman in almost every cognitive domain. Their scenario even envisions millions of superintelligent AI agents operating at once, handling tasks beyond any human’s ability to follow. While this would unleash tremendous opportunities (imagine ASIs collaboratively curing diseases, solving climate change, and expanding scientific knowledge at unprecedented speeds), it also poses existential questions. One major concern is alignment – ensuring that superintelligent AI systems have goals and values that are compatible with human well-being. Already, we’ve seen advanced models occasionally behave in unexpected or undesired ways (for example, producing false or biased information, or in one case, an AI model giving harmful advice to a user). As AI grows more powerful, these missteps could have larger consequences. A superintelligent AI, if misaligned, might pursue some objective in a manner that conflicts with human interests. The AI 2027 scenario underscores that without proper safeguards, future AIs could develop unintended, adversarial goals that conflict with what humans actually want. On the flip side, a coordinated and well-managed approach to superintelligence – where global cooperation and safety research ensure AIs remain under meaningful human control – could lead to a golden age for humanity. Issues like these are why AI safety and ethics research has gained prominence alongside technical AI progress. In the coming years, expect to hear more about initiatives to govern AI development (international agreements, safety standards, etc.).
It’s a lot like the early days of biotechnology or nuclear technology – the tech is powerful, so it needs guidance to ensure a positive outcome.

- AI and the Workforce: One immediate concern for many is how advancing AI will affect jobs and skills. It’s true that AI’s growing capabilities will change the nature of work in many fields. Repetitive and routine tasks are increasingly easy to automate – from drafting routine reports to sorting and tagging data – which may reduce the need for certain roles. However, history has shown that technology tends to create new roles even as it displaces others. In the near term, rather than wholesale job replacement, we’re likely to see AI augmenting human workers. For example, an AI copilot can help a software developer by writing boilerplate code, allowing the developer to focus on higher-level architecture and creative design. A marketer might use AI to generate initial campaign ideas or images, and then refine the best ones. A lawyer could have AI summarize case law, saving hours of research and freeing them to craft strategy. The workforce of the future will work alongside AI tools – productivity could soar, and the nature of many jobs will shift to emphasize uniquely human strengths: oversight, complex decision-making, empathy, and strategic thinking. That said, reskilling and upskilling will be very important. Organizations and governments are already starting to invest in training programs to help workers adapt to an AI-infused economy. By embracing AI as a collaborator rather than seeing it purely as competition, both individuals and businesses can position themselves to thrive. Notably, entirely new job categories are emerging too, such as “AI trainers,” “prompt engineers,” and AI maintenance specialists, roles that barely existed a few years ago. In summary, the future will have plenty of work, but the toolkit and required skills will evolve.
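The email-triage agent described earlier (scanning incoming emails, drafting responses, and only flagging what needs human approval) can be sketched as a loop that picks a tool for each message and checks it against explicit boundaries before acting. This is a minimal, hypothetical illustration: the tool names, the keyword-based `choose_action` stand-in for a language model, and the approval policy are all assumptions, not any vendor’s agent API.

```python
from dataclasses import dataclass, field
from typing import Callable


@dataclass
class Email:
    sender: str
    subject: str
    body: str


@dataclass
class Agent:
    # Allowlist: the only tools this agent may call.
    tools: dict[str, Callable[[Email], str]]
    # Tools with real-world side effects that must wait for a human.
    needs_approval: set[str]
    approval_queue: list[tuple[str, Email]] = field(default_factory=list)
    log: list[str] = field(default_factory=list)

    def choose_action(self, email: Email) -> str:
        """Hypothetical stand-in for a language-model call that picks a tool."""
        text = f"{email.subject} {email.body}".lower()
        if "refund" in text:
            return "issue_refund"
        if "?" in email.body:
            return "draft_reply"
        return "archive"

    def handle(self, email: Email) -> None:
        action = self.choose_action(email)
        if action not in self.tools:
            # Boundary 1: never run an action outside the allowlist.
            self.log.append(f"refused unknown action {action!r}")
        elif action in self.needs_approval:
            # Boundary 2: high-impact actions are queued for human review.
            self.approval_queue.append((action, email))
            self.log.append(f"queued {action} for human approval")
        else:
            self.log.append(self.tools[action](email))


def build_demo_agent() -> Agent:
    """Hypothetical tools; a real agent would call email or payment services."""
    return Agent(
        tools={
            "draft_reply": lambda e: f"drafted reply to {e.sender}",
            "archive": lambda e: f"archived '{e.subject}'",
            "issue_refund": lambda e: f"refunded {e.sender}",
        },
        needs_approval={"issue_refund"},
    )


if __name__ == "__main__":
    agent = build_demo_agent()
    inbox = [
        Email("ana@example.com", "Meeting", "Can we move our call to Friday?"),
        Email("bob@example.com", "Newsletter", "This month's updates."),
        Email("cy@example.com", "Refund request", "Please refund order 1234."),
    ]
    for message in inbox:
        agent.handle(message)
    print("\n".join(agent.log))
    print(f"{len(agent.approval_queue)} action(s) awaiting approval")
```

The design choice worth noting is that the boundaries live outside the decision-making component: even if `choose_action` picks a risky tool, the allowlist and approval check decide whether it actually runs, which is one simple way to keep the human supervision discussed above.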

In light of these future trends, one thing is clear: AI’s impact by 2030 will likely be enormous – potentially on the scale of the Industrial Revolution or greater. It’s a transformative technology that is set to redefine industries, economies, and our daily lives. Many difficult-to-predict variables remain (technical hurdles, regulatory responses, societal acceptance, etc.), but businesses cannot afford to ignore where AI is headed.

Planning for an AI-driven future: For businesses, preparing for these developments means acting today. Companies should start by identifying where AI can add value in their operations – be it through automating tasks, gaining better insights from data, or creating new AI-powered products and services. A prudent approach is to begin with pilot projects using current AI tools, build internal expertise, and develop a roadmap for increasingly sophisticated AI integration over the next several years. Given the rapid progress, even small and mid-sized businesses will be able to leverage off-the-shelf AI services for things that recently required cutting-edge R&D. Cloud AI platforms are lowering barriers to entry, offering APIs for everything from vision to language to analytics. At the same time, keeping an eye on research trends is important; what was cutting-edge only recently (like GPT-4) quickly becomes an everyday tool, and something seemingly far-out (like reliable AI agents or advanced robotics) might arrive faster than anticipated.

Crucially, companies should also consider the ethical and governance aspects of AI adoption. Ensuring data privacy, avoiding bias in AI decisions, and maintaining human accountability are not just good practices but increasingly are demanded by consumers and regulators. A future where AI is ubiquitous will also be a future where trust and transparency are paramount. Brands that use AI responsibly and explain its role to customers could have a competitive advantage in trust.

Finally, embracing the AI future often requires the right talent and partners. Data scientists, machine learning engineers, and domain experts who know how to apply AI are in high demand. Some organizations will build in-house AI teams, while others will partner with specialists or AI vendors.

Looking to elevate your business with the next wave of AI advancements? Now is the perfect time to explore the opportunities. Timspark is ready to guide you on this journey with our AI development services – whether you need to develop a custom AI solution or strengthen your team with AI expertise. Don’t miss out on the future – let’s shape it together.


References

- AI timelines, milestones, and forecasts. AI-2027 Project (2024–2025).

- Generative AI vs. other types of AI. Adobe (2024).

- Our 10 biggest AI moments so far. Google (2023).

- Understanding the different types of artificial intelligence. IBM (2023).

- The state of AI in early 2024: Gen AI adoption spikes and starts to generate value. McKinsey & Company (2024).

- A history of AI. Oxford University (2024).

- Types of Artificial Intelligence That You Should Know in 2024. Simplilearn (2024).

- History of artificial intelligence. Wikipedia (2024).

- AI timelines: What do experts in artificial intelligence expect for the future? Our World in Data (2023).